Large Language Models (LLMs) and generative AI (GenAI) have unlocked capabilities that were science fiction a few years ago. Today we are going to talk about automating mundane infrastructure management tasks, whether they involve databases, operating systems or clouds.

Agents 101

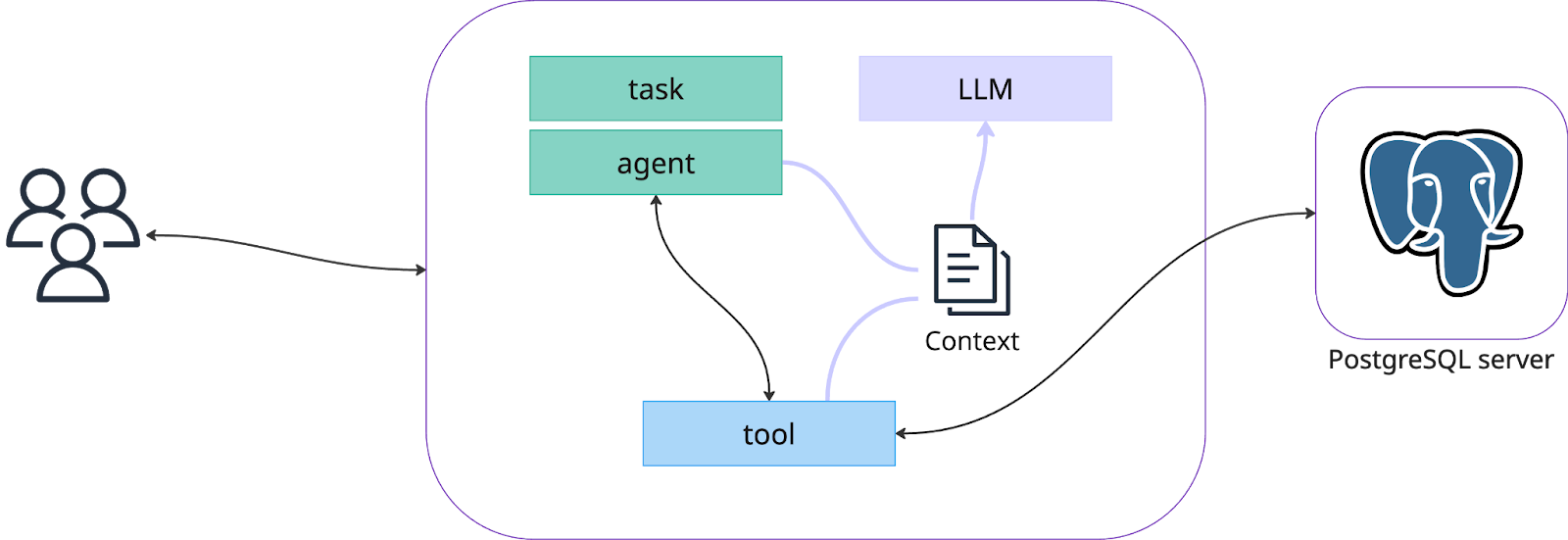

The beauty of agents is that they can take fuzzy inputs and produce more or less deterministic outputs; how deterministic depends on how you configure them. The hard-working engine behind agents is the LLM, but the secret sauce is the tools that agents use to fetch or change something in other systems and then enrich the context with the tools' results.
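To make that loop concrete, here is a framework-free sketch of what an agent does under the hood. It is an illustration, not how CrewAI is implemented: `fake_llm` is a hypothetical stand-in for a real model call, and the two tools return canned strings instead of querying a live database. The point is the cycle: the model picks an action, a tool runs, and the tool's result is appended to the context for the next decision.

```python
from typing import Callable

# Hypothetical tools returning canned strings; real tools would query live systems.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_version": lambda _arg: "PostgreSQL 16.8",
    "count_connections": lambda _arg: "1 active connection",
}


def fake_llm(context: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: picks the next action from what is in the context.

    Returns (action, argument); action is a tool name or "final".
    """
    observations = [line for line in context if line.startswith("observation: ")]
    if len(observations) == 0:
        return "get_version", ""
    if len(observations) == 1:
        return "count_connections", ""
    summary = "; ".join(line.removeprefix("observation: ") for line in observations)
    return "final", summary


def run_agent(question: str, max_steps: int = 5) -> str:
    """Minimal agent loop: ask the model, call a tool, feed the result back."""
    context = [f"question: {question}"]
    for _ in range(max_steps):
        action, arg = fake_llm(context)
        if action == "final":
            return arg
        result = TOOLS[action](arg)               # the tool touches the outside world
        context.append(f"observation: {result}")  # tool results enrich the context
    return "stopped: step limit reached"


print(run_agent("perform a health assessment of my database"))
# PostgreSQL 16.8; 1 active connection
```

A real framework replaces `fake_llm` with a model that reads the tool descriptions and decides which one to call, which is exactly why the descriptions matter so much.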

Building your first agent

All the code from this blog post can be found in this GitHub repository: spron-in/blog-data/ai-agents-infra

You can build an agent from scratch, but it is easier to start with a framework. There are plenty: AutoGen from Microsoft, smolagents from Hugging Face, CrewAI, and more.

I will be using CrewAI today, but all these frameworks follow the same patterns, so adapting the code to another one is straightforward.

Our goal is to build a PostgreSQL agent that can answer questions about the database without us having to connect to it ourselves.

The project consists of the following main files: agents.py, tasks.py, tools.py and the 01_crew.py runner.

Find the full code in the repo; I will highlight only the important parts here.

agents.py

This file defines our agent (its role, goal and backstory) and picks the tools it can use.

```python
from crewai import Agent

# `llm` and `postgresql_tool` are defined elsewhere in the repo.
postgresql_reader_agent = Agent(
    role="AI Database Reliability Engineer with PostgreSQL expertise",
    goal="Troubleshoot PostgreSQL database issues and find the answer to the user questions",
    backstory=(
        "You are a professional who is involved in very complex PostgreSQL "
        "..."),
    llm=llm,
    verbose=True,
    allow_delegation=False,   # this agent does not hand work off to other agents
    tools=[postgresql_tool],  # the only tool this agent may call
)
```

As you can see, we allow the agent to use `postgresql_tool`.

tasks.py

Here we define the expectations: both what the agent should expect as input and what behavior we expect from it. The prompt is quite lengthy, but I want to highlight how I instruct the agent to run only read-only queries:

```python
"""
You only have read-only permissions to the database by default. You should not execute destructive commands like
DROP, DELETE, or UPDATE unless explicitly requested and confirmed by the user. Focus primarily on SELECT queries for
diagnostics and analysis.
"""
```

tools.py

Tools are functions: they expect specific inputs and produce deterministic outputs. In the tool, I describe what inputs the agent must provide:

```python
from typing import Type

from crewai.tools import BaseTool
from pydantic import BaseModel, Field


class PostgreSQLCommandInput(BaseModel):
    """Input for executing PostgreSQL commands securely."""

    command: str = Field(..., description="The SQL command to execute (e.g., 'SELECT * FROM users LIMIT 10').")
    host: str = Field(..., description="The PostgreSQL server host address.")
    port: int = Field(5432, description="The PostgreSQL server port (default: 5432).")
    user: str = Field(..., description="The PostgreSQL username.")
    password: str = Field(..., description="The PostgreSQL password.")
    database: str = Field(..., description="The PostgreSQL database name to use.")


class PostgreSQLTool(BaseTool):
    name: str = "PostgreSQL Command Executor"
    description: str = "Executes PostgreSQL commands securely and returns the results in a readable format."
    args_schema: Type[BaseModel] = PostgreSQLCommandInput

    # tool code below
```

Notice the detailed descriptions: they help the agent understand the expectations. Using pydantic is highly recommended, as it strictly defines the types of the variables and lets you describe the structure properly.
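The `# tool code below` part is where the tool actually talks to the database. The prompt in tasks.py asks for read-only queries, but a prompt is guidance, not enforcement. Here is a minimal sketch, assuming you want the tool itself to double-check commands before running them and to return rows as plain text; `is_read_only`, `format_rows` and the keyword list are hypothetical, not part of CrewAI or the repo. It is a heuristic only: the real safety net is a PostgreSQL role that has no write privileges.

```python
import re

# Write/DDL keywords that should never appear in a read-only command.
# Heuristic only: the real safety net is a database role without write privileges.
WRITE_KEYWORDS = re.compile(
    r"\b(insert|update|delete|merge|drop|alter|truncate|create|grant|revoke|copy)\b",
    re.IGNORECASE,
)


def is_read_only(sql: str) -> bool:
    """Rough check that `sql` is a single SELECT/SHOW statement with no write keywords."""
    statements = [s.strip() for s in sql.split(";") if s.strip()]
    if len(statements) != 1:
        return False  # refuse batches such as "SELECT 1; DROP TABLE users"
    statement = statements[0]
    first_word = statement.split(None, 1)[0].lower()
    # Note: side-effecting functions (e.g. SELECT pg_terminate_backend(...)) still pass.
    return first_word in ("select", "show") and not WRITE_KEYWORDS.search(statement)


def format_rows(columns: list[str], rows: list[tuple]) -> str:
    """Render query results as a plain-text table that is easy for an LLM to read."""
    header = " | ".join(columns)
    lines = [header, "-" * len(header)]
    lines.extend(" | ".join(str(value) for value in row) for row in rows)
    return "\n".join(lines)


print(is_read_only("SELECT datname, numbackends FROM pg_stat_database"))  # True
print(is_read_only("DROP TABLE users"))  # False
print(format_rows(["datname", "numbackends"], [("aitestdb", 1)]))
```

Rejecting a command returns control to the agent, which can then rephrase its query or ask the user, rather than silently running something destructive.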

Run 01_crew.py

Now let's run the crew. Note how I provide the credentials: they can be in almost any format, and the LLM will be able to decipher them and pass them to a tool.

```python
assets = [
    {
        "type": "postgresql",
        "host": "MASKED",
        "port": "5432",
        "user": "aiuser",
        "database": "aitestdb",
        "password": "MASKED"
    }
]
```

When you run `python 01_crew.py`, you will see detailed output of how the agent thinks and uses the tools. At the end, you will see the final response:

```
python 01_crew.py "perform a health assessment of my database"
...
The PostgreSQL database 'aitestdb' on server MASKED:5432 is running PostgreSQL version 16.8. Database statistics show some activity with commits, rollbacks, and data access. There's one active connection from client address 10.15.34.2. The database currently contains only system tables within the pg_catalog and information_schema schemas, indicating a potentially new or system-level database. Background writer statistics show checkpoint and buffer allocation activity.
```

See the full thought process in the GitHub repo: outputs/01_crew_output.txt
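The snippets above do not show how the question and the assets reach the agent. One plausible way, sketched here with a hypothetical `build_inputs` helper (the repo may do it differently), is to take the question from the command line and serialize the assets as JSON text next to it. The LLM then pulls the host, user and password out of that text when it fills in the tool's arguments.

```python
import json
import sys

# Same shape as the assets list in 01_crew.py (secrets masked).
assets = [
    {
        "type": "postgresql",
        "host": "MASKED",
        "port": "5432",
        "user": "aiuser",
        "database": "aitestdb",
        "password": "MASKED",
    }
]


def build_inputs(argv: list[str]) -> dict[str, str]:
    """Hypothetical helper: combine the CLI question and the assets into prompt inputs."""
    question = " ".join(argv[1:]) or "perform a health assessment of my database"
    # Serialized as JSON text; the LLM extracts host, user, etc. when it calls a tool.
    return {"question": question, "assets": json.dumps(assets, indent=2)}


if __name__ == "__main__":
    inputs = build_inputs(sys.argv)
    print(inputs["question"])
    # With CrewAI, a dict like this is typically passed as crew.kickoff(inputs=inputs),
    # filling {question} and {assets} placeholders in the task descriptions.
```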

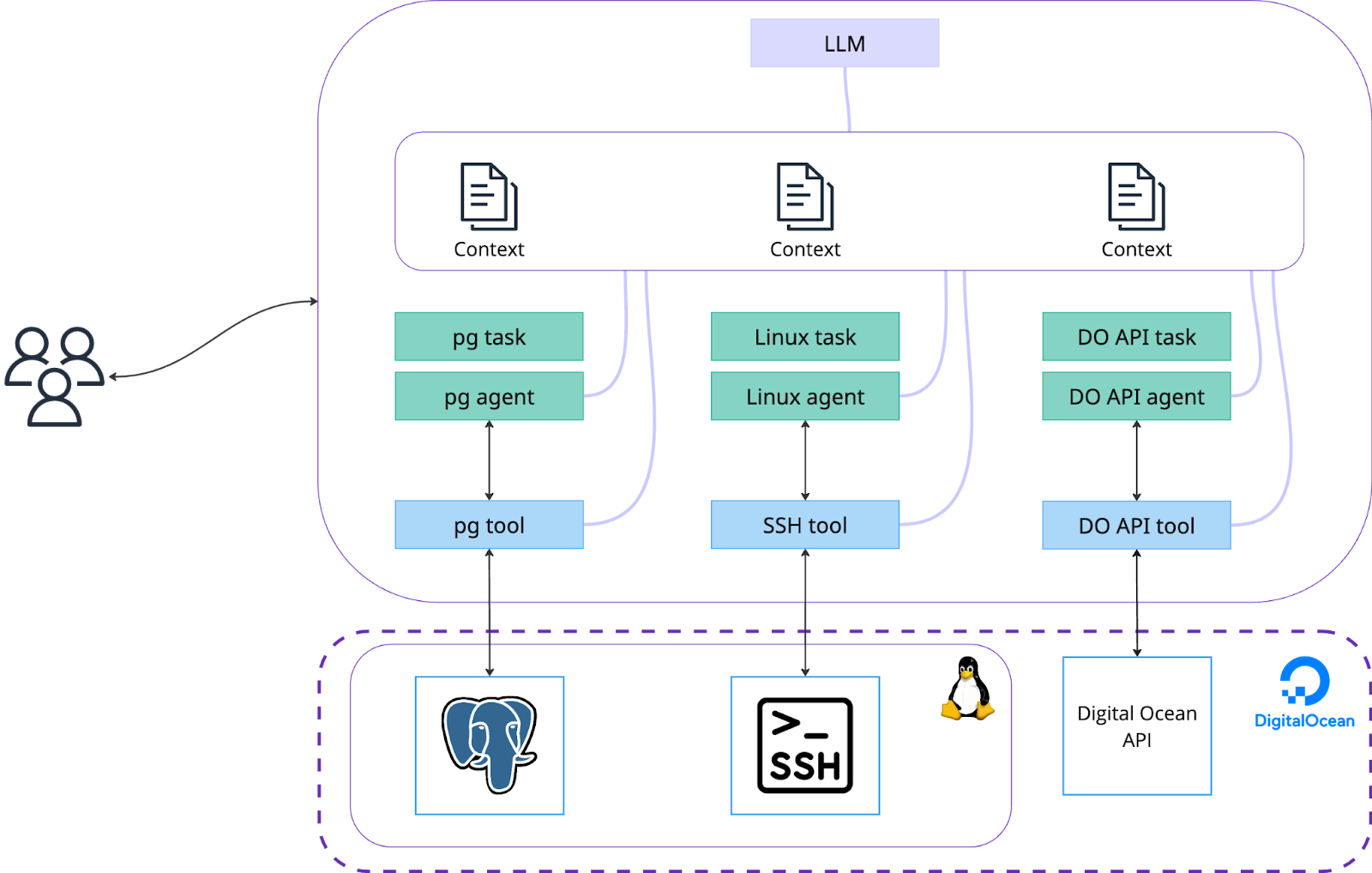

Agents in the world of complexity

Modern infrastructure is complex. Databases do not run in silos: there are applications, servers, monitoring systems, microservices and more. In the agentic world, all this means more agents and more context.

My PostgreSQL server runs on a single virtual machine (a droplet) in DigitalOcean (DO). This means our agentic system can get more data from the Linux server itself and from DigitalOcean's monitoring system via its API. Doing so raises the awareness of our agents: they know more and provide deeper insights.
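On the DO side, "via an API" boils down to authenticated HTTPS requests against `https://api.digitalocean.com/v2` with a bearer token. A minimal sketch of what a GET-only API tool might build, assuming a personal access token; `build_do_request` is a hypothetical name, and restricting it to GET keeps the tool in line with the read-only approach.

```python
import urllib.request

# Base URL of the DigitalOcean API (v2).
DO_API_BASE = "https://api.digitalocean.com/v2"


def build_do_request(endpoint: str, token: str) -> urllib.request.Request:
    """Build an authenticated, read-only (GET) request for a DO API endpoint."""
    url = f"{DO_API_BASE}/{endpoint.lstrip('/')}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


req = build_do_request("/droplets", token="MASKED")
print(req.get_method(), req.full_url)  # GET https://api.digitalocean.com/v2/droplets
# urllib.request.urlopen(req) would actually send it and return the JSON response.
```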

agents.py

We add two more agents: one for Linux and one for DO's API. The DO agent has two tools: one for interacting with the API and another for looking up API endpoints in the specification, because we can't assume the LLM knows everything about every API.

tasks.py

We now have two new tasks: one for Linux and another for the DigitalOcean integration via the API.

tools.py

In tools.py we add a few new tools:

- SSHTool: runs commands on a Linux server over an SSH connection

- DigitalOceanAPITool: runs requests against DO API endpoints

- DigitalOceanEndpointSearchTool: searches DO's API specification for specific endpoints, since we can't assume the LLM knows all of them. Think of it as a form of Retrieval Augmented Generation (RAG).
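The endpoint search tool is the retrieval half of RAG: find the relevant parts of the spec and put them into the agent's context. A minimal sketch, assuming the spec is loaded as an OpenAPI-style `paths` object; the four-entry excerpt below is hand-written for illustration, and plain keyword overlap stands in for the embedding search a production tool might use. `search_endpoints` is a hypothetical name, not the repo's implementation.

```python
import re

# Illustrative excerpt shaped like an OpenAPI "paths" object; a real tool
# would load DigitalOcean's full published specification instead.
SPEC_PATHS = {
    "/v2/droplets": {"get": {"summary": "List all droplets"}},
    "/v2/droplets/{droplet_id}": {"get": {"summary": "Retrieve an existing droplet"}},
    "/v2/monitoring/alerts": {"get": {"summary": "List alert policies"}},
    "/v2/databases": {"get": {"summary": "List all database clusters"}},
}


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_endpoints(query: str, top_k: int = 2) -> list[str]:
    """Return the best matching 'METHOD path: summary' lines for a query."""
    query_tokens = tokenize(query)
    scored = []
    for path, methods in SPEC_PATHS.items():
        for method, operation in methods.items():
            doc = f"{method.upper()} {path}: {operation.get('summary', '')}"
            score = len(query_tokens & tokenize(doc))  # shared keywords
            if score:
                scored.append((score, doc))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


print(search_endpoints("list alert policies for monitoring"))
```

The agent then calls the API tool with the endpoint it found, instead of guessing a URL from memory.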

Run 02_crew.py

```
python 02_crew.py "perform a health assessment of my database"
...
Based on the information I have, here's a health assessment of your database:

**Database Server (do_test_server - MASKED):**

* **General Status:** The server appears to be running and accessible.
* **Resources:** The server is a DigitalOcean droplet ("test-ai-agents") with 1GB of RAM, 1 vCPU, and 25GB of disk space.
* **Monitoring:** A CPU usage alert is configured, triggering if CPU usage exceeds 70% for 5 minutes.
* **Operating System:** Ubuntu 20.04 (LTS) x64.
* **Networking:** Public IPv4 and IPv6 addresses are assigned. Private networking is enabled.

...and more...
```

See the full thought process in the GitHub repo: outputs/02_crew_output.txt

The file is really long and contains multiple tool calls that fetch information from Linux and DigitalOcean. You can also see that some commands fail, and the agent simply proceeds, adding that information to its context.
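How a failure gets reported back to the model is up to the framework and the tool. A minimal sketch of the pattern, assuming a hypothetical `safe_tool` decorator: instead of raising, the tool returns the error as text, so it lands in the context like any other observation and the agent can move on to another command. `run_linux_command` is likewise a made-up tool that always fails.

```python
import functools
from typing import Callable


def safe_tool(func: Callable[..., str]) -> Callable[..., str]:
    """Turn exceptions raised by a tool into text the agent can reason about."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs) -> str:
        try:
            return func(*args, **kwargs)
        except Exception as exc:  # any failure becomes an observation, not a crash
            return f"ERROR: {type(exc).__name__}: {exc}"
    return wrapper


@safe_tool
def run_linux_command(command: str) -> str:
    # Hypothetical tool that always fails, e.g. because the binary is not installed.
    raise FileNotFoundError(f"{command.split()[0]}: command not found")


context: list[str] = []
context.append(run_linux_command("iostat -x 1 1"))
print(context)  # ['ERROR: FileNotFoundError: iostat: command not found']
```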

Random notes

Agents read, agents do

As you might have noticed, my agents are instructed to execute read-only operations only. It is possible to let agents execute commands that trigger changes, but it is advisable to start with agents that ask for approval before running such commands. Once you reach the point where the changes your agents propose can't harm your systems, switch to fully autonomous agents.
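The "ask for approval" stage can be as simple as a gate in front of the executor. A minimal sketch, assuming hypothetical `execute` and `approve` callables: read-only commands pass straight through, everything else waits for a human "yes". The prefix check is deliberately naive for illustration; a real policy should be stricter. Moving to fully autonomous agents then means swapping `ask_operator` for an approver that always returns `True`.

```python
from typing import Callable


def execute_with_approval(
    command: str,
    execute: Callable[[str], str],
    approve: Callable[[str], bool],
) -> str:
    """Run read-only commands directly; anything else needs a human "yes" first."""
    # Naive prefix check for illustration; a real policy should be much stricter.
    if command.strip().lower().startswith(("select", "show")):
        return execute(command)
    if approve(command):
        return execute(command)
    return f"Rejected by operator: {command}"


def ask_operator(command: str) -> bool:
    """Interactive approval prompt for use in a terminal."""
    answer = input(f"Agent wants to run:\n  {command}\nApprove? [y/N] ")
    return answer.strip().lower() == "y"


def fake_execute(command: str) -> str:
    return f"executed: {command}"


# Non-interactive demo: an approver that always says no.
print(execute_with_approval("SELECT version()", fake_execute, approve=lambda c: False))
print(execute_with_approval("VACUUM ANALYZE users", fake_execute, approve=lambda c: False))
```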

Agents vs tools

As you can see, I have 3 different agents using 4 different tools. The question arises: why not have one generalist agent that uses all 4 tools? It can be done, but it is not recommended, mostly because your prompt will grow like a snowball with various “ifs”, “don’ts” and other limitations.

Conclusion and looking ahead

LLMs are imperfect. There are still hallucinations, relatively small context windows, and training data of questionable quality. But even with these imperfections, there is a lot of opportunity to become more efficient and then to automate some tasks completely: tasks we thought were impossible to automate because of the many variables, changing parameters and general fuzziness.

We will see more and more agents appearing. They will be dumb at first, but will get better with every iteration and every new breakthrough in AI development. The future of infrastructure management is intelligent, adaptive, and proactive. As we continue to refine these agentic systems, we're not just building tools; we're building partners. Partners that can learn, adapt, and evolve alongside our ever-changing needs. The potential is limitless, and the journey is thrilling.